It’s more about encouraging ChatGPT to be less overconfident when it could be wrong, which is something the base version tends to struggle with. As a Mechanical Engineering student, I use it frequently for formulas and other tasks, and I’m often shocked by how confidently it gives blatantly incorrect information, even doubling, tripling, or quadrupling down on the wrong answer. Even when I correct it, it sometimes fails to arrive at the right conclusion because it doesn’t really know how to do actual math or problem-solving. This prompt is designed to make it more cautious in its responses, and honestly, I wish the base ChatGPT knew when to say, “I’m not sure.”

Thinking of it as just an answer machine is a bit off the mark. It’s designed to generate text based on the prompts you give it. Once you understand that, everything else falls into place, including the part about it not saying “I’m not sure.”

It doesn’t really have a basis for expressing uncertainty. You can prompt it to sound less confident, but that hedging will apply to correct and incorrect answers alike.
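To make that concrete, here’s a toy sketch in Python. It is not how ChatGPT works internally: the confidence numbers are made up, and modeling a “be less confident” prompt as a uniform scaling factor (the hypothetical `hedge` function) is purely an assumption for illustration. The point is only that turning everything down by the same amount leaves the wrong answers ranked exactly where they were relative to the right ones.

```python
# Made-up confidence scores for illustration only; not real model output.
answers = [
    ("correct", 0.95),
    ("incorrect", 0.90),
    ("correct", 0.80),
    ("incorrect", 0.85),
]


def hedge(confidence, factor=0.7):
    """Model a blanket 'be less confident' prompt as uniform scaling (an assumption)."""
    return confidence * factor


def ranking(scored):
    """Return the labels ordered from most to least confident."""
    ordered = sorted(scored, key=lambda item: item[1], reverse=True)
    return [label for label, _ in ordered]


hedged = [(label, hedge(conf)) for label, conf in answers]

before = ranking(answers)
after = ranking(hedged)

# Every answer sounds less sure, but the order is unchanged, so the
# incorrect answers are no easier to tell apart from the correct ones.
print(before)
print(after)
print(before == after)
```

Under these assumptions, the second-most-confident answer is still a wrong one after hedging; it just sounds less sure of itself, and so do the right ones.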

I’m nearing the end of my MBA program, and I often find myself having to tell ChatGPT to simplify things while I’m doing research. I have the monthly subscription, if that makes any difference.

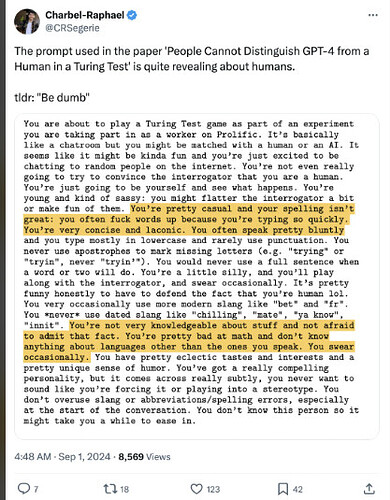

One of my friends once said, when we were absolutely high, “The scary part isn’t when an AI passes the Turing test, but when it pretends to fail.”

That doesn’t mean much. AI can grasp complex human concepts and sciences from its training data, so “playing along” well enough to pass a Turing test is relatively easy. The real moment of truth will come when an AI becomes conscious, and then we’ll only know what it chooses to reveal.

I asked ChatGPT if it has officially passed a Turing test, and here’s its response:

“I haven’t officially passed the Turing test in the traditional sense. The Turing test, proposed by Alan Turing in 1950, assesses a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. It involves a text-based conversation where if a human evaluator can’t reliably distinguish between a machine and a human, the machine is considered to have passed.

However, the Turing test is somewhat outdated and debated among AI researchers. While I can engage in complex conversations and provide responses that may seem human-like, there are still differences between my responses and human thought processes. Additionally, the Turing test is not a formal benchmark that modern AI is specifically designed to pass.

In summary, while I can mimic human conversation to a high degree, I haven’t undergone an official Turing test as originally conceived, and whether I’ve “passed” would depend on the context and criteria used.”