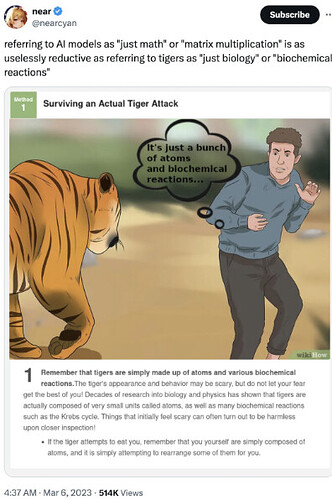

The math in a neural network is far simpler than biochemistry, which is why people say that. Lots of matrix multiplications (each a relatively simple operation), interleaved with simple nonlinear functions, can result in very complex behavior.
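To make that concrete, here is a minimal pure-Python sketch. The weights `W1`, `W2` and the helper names are made up for illustration. A two-layer network is just two matrix multiplications with a ReLU in between. With the ReLU, it computes |a - b|, a nonlinear function of its two inputs. Remove the ReLU and the two multiplications collapse into a single linear map, which for these particular weights happens to be the zero map.

```python
# Hypothetical toy example: a 2 -> 2 -> 1 network built only from
# matrix multiplication plus an elementwise ReLU.

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def relu(m):
    """Elementwise max(0, x): the only nonlinear step in this network."""
    return [[max(0.0, x) for x in row] for row in m]

# Made-up weights chosen so the network computes |a - b|.
W1 = [[1.0, -1.0],
      [-1.0, 1.0]]
W2 = [[1.0],
      [1.0]]

def mlp(x):
    """Forward pass: input (1 x 2) -> hidden (1 x 2) -> output (1 x 1)."""
    return matmul(relu(matmul(x, W1)), W2)

def linear_only(x):
    """Same weights with the ReLU removed: equivalent to x @ (W1 @ W2)."""
    return matmul(matmul(x, W1), W2)

print(mlp([[3.0, 1.0]]))          # -> [[2.0]]
print(linear_only([[3.0, 1.0]]))  # -> [[0.0]]
```

The nonlinearity is what keeps a deep stack of matrix multiplications from being equivalent to a single one.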

In fact, a single tiger cell is arguably more complex than any supercomputer.

I mean, it kind of is just well-organized tensor arithmetic. But machine learning is a broad term, so the better analogy is saying "biological brains are just neurons, electrical signals, and neurotransmitters," which is much more accurate and specific than the tiger example.

Honestly, I’d say things like the transformer architecture are basically matrix multiplication on steroids.
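The "on steroids" part shows up in the transformer's core operation, scaled dot-product attention, `softmax(Q K^T / sqrt(d_k)) V`. Below is a minimal single-head sketch in pure Python, assuming the query, key, and value matrices `Q`, `K`, `V` are already given. A real transformer also learns the projections that produce them and adds multiple heads, masking, feed-forward layers, and normalization, all omitted here. Apart from one softmax, the whole thing is two matrix multiplications.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def softmax_rows(m):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    out = []
    for row in m:
        top = max(row)
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(Q, K, V):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))                   # matrix multiplication #1
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scaled)                     # the only nonlinear step here
    return matmul(weights, V)                          # matrix multiplication #2

# Made-up inputs: a query that scores both keys equally
# gets the plain average of the value rows.
print(attention([[0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]], [[2.0, 4.0], [6.0, 8.0]]))
# -> [[4.0, 6.0]]
```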