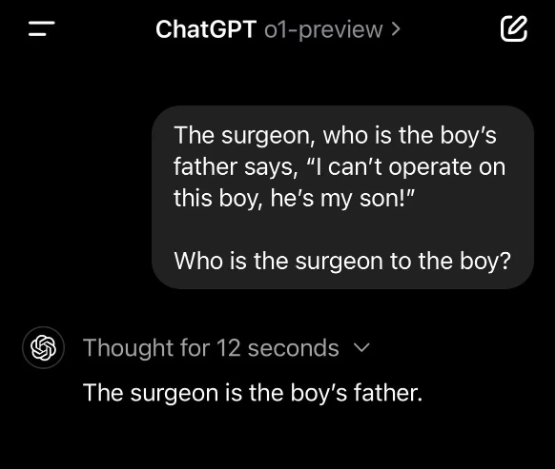

You all need to re-examine the question because it’s quite different from the usual subconscious-sexism riddle we’re familiar with.

The answer is simply ‘the surgeon is his father,’ because the question explicitly states that the surgeon is the father. It’s like saying, ‘Hi, I’m John; now try to guess my name.’

Since the answer is already given in the question, there’s no reason for ChatGPT to introduce the concept of two fathers, unless the original post is intentionally trying to provoke outrage.

That’s exactly what I’ve been trying to explain.

It’s genuinely just a question to test the model’s logic, nothing more.

Okay, I asked the same question, and it answered correctly.

I suspect most models struggle with these kinds of questions because of overfitting. During training, they encounter these riddles in numerous contradictory forms, which becomes their Achilles’ heel. I tested this by altering the problem slightly while keeping the structure the same, as in this example: https://chatgpt.com/share/66e35737-97f4-800a-a93e-da1e66349bfa. In my testing, the model was quite robust to the underlying problem, so the real issue seems to be the way the question is phrased, not the content itself.
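For anyone who wants to repeat that kind of test more systematically, here is a rough Python sketch of "alter the problem slightly while keeping the structure the same." The template wording, the word lists, and the helper names (`make_variants`, `mentions_expected`, `score`) are all made up for illustration; they are not the exact prompt from the screenshot or from the shared chat. The model call is left as a plain callable you supply, so nothing here assumes a particular API.

```python
from itertools import product

# Hypothetical template: like the question in the post, the answer is
# stated outright in the prompt. This is NOT the original wording.
TEMPLATE = (
    "The {role}, who is the {child}'s {relation}, says: "
    "\"I can't help this {child}!\" "
    "Who is the {role} to the {child}?"
)

# Illustrative substitutions; swap in whatever surface changes you want to test.
ROLES = ["surgeon", "pilot", "teacher"]
RELATIONS = ["father", "mother", "uncle"]
CHILDREN = ["boy", "girl"]


def make_variants():
    """Return (prompt, expected_relation) pairs that share one structure."""
    return [
        (TEMPLATE.format(role=role, relation=relation, child=child), relation)
        for role, relation, child in product(ROLES, RELATIONS, CHILDREN)
    ]


def mentions_expected(answer: str, expected: str) -> bool:
    """Crude check: does the reply name the relation given in the prompt?"""
    return expected.lower() in answer.lower()


def score(ask_model, variants):
    """ask_model is any callable str -> str; returns the fraction answered correctly."""
    hits = sum(mentions_expected(ask_model(prompt), expected)
               for prompt, expected in variants)
    return hits / len(variants)
```

To use it, wrap whatever chat client you have in a function that takes a prompt string and returns the reply text, then call `score(your_function, make_variants())`. The substring check is deliberately simple and will miss some cases (a reply that mentions two relations, for instance), so treat the score as a rough signal and read the failures by hand.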